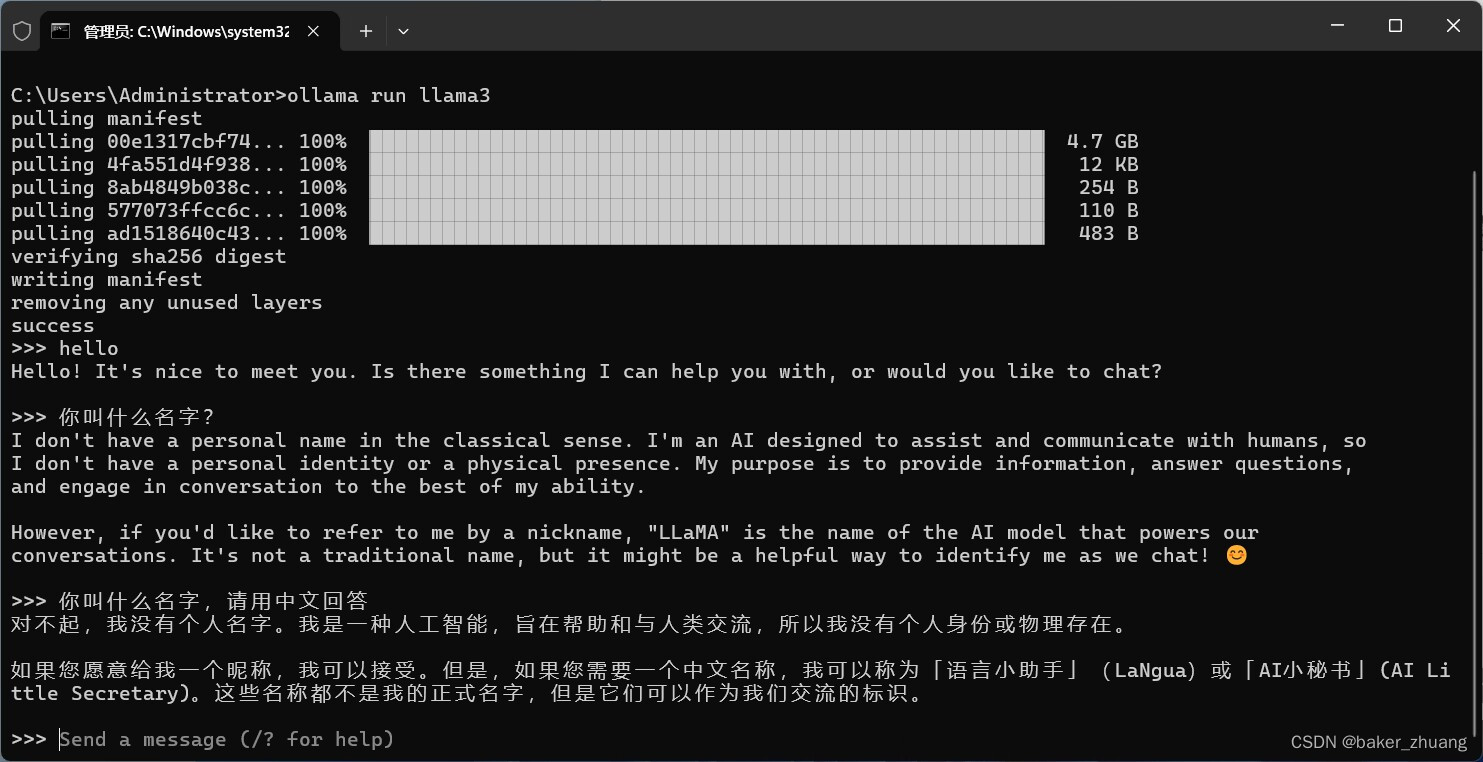

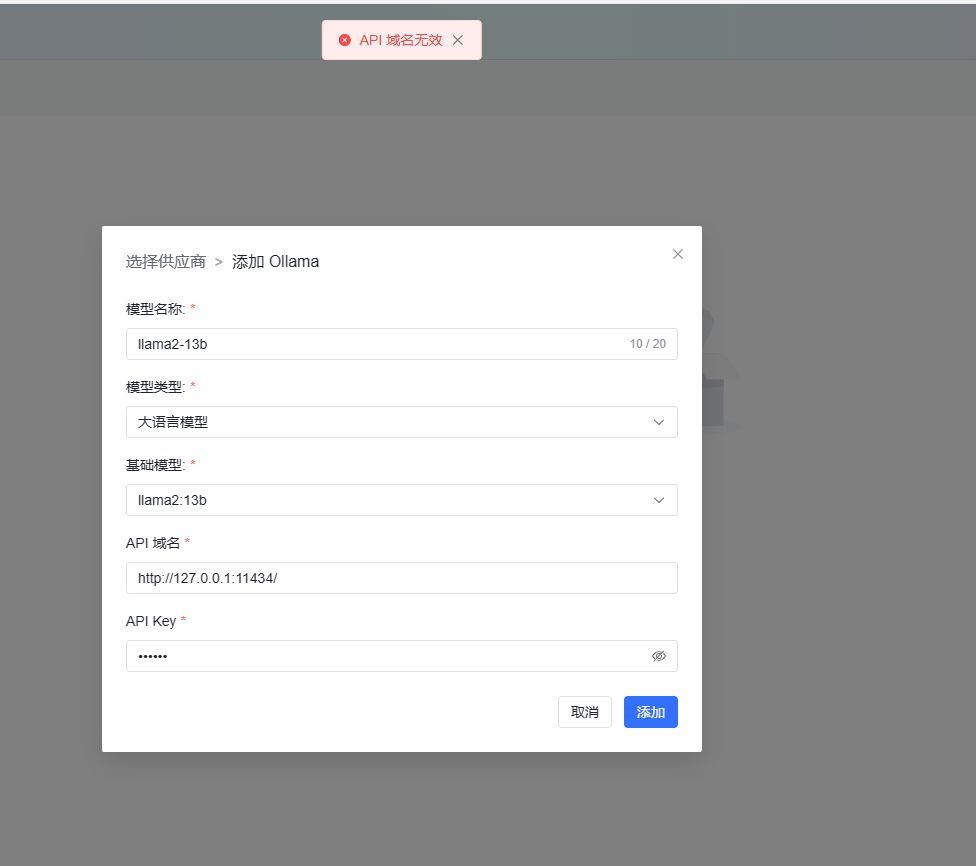

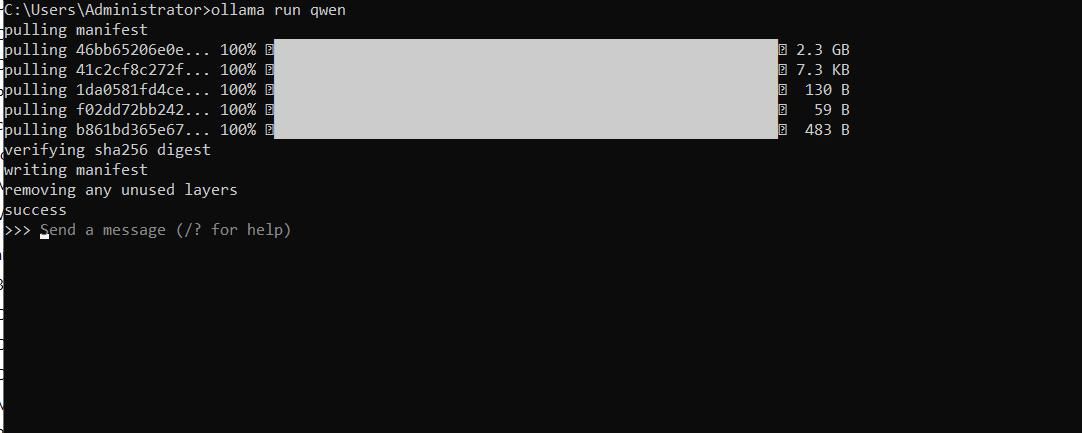

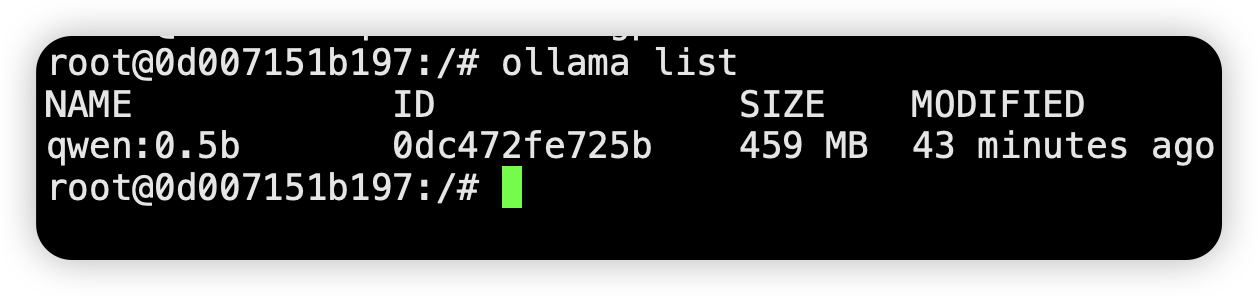

Deploying Large Models Offline with Ollama: A Quick-Start Guide to Downloading Qwen and ModelFile Commands

Ollama GPU Support (r/ollama, Reddit)

Training a model with my own data (r/LocalLLaMA, Reddit)

How to make Ollama faster with an integrated GPU (r/ollama, Reddit)

How to uninstall models (r/ollama, Reddit)

Best model to run locally on a low-end GPU with 4 GB of RAM right now

Ollama running on Ubuntu 24.04 (r/ollama, Reddit)

Options for running LLMs on a laptop better than Ollama (Reddit)

